Frontent Developer3iSeoul, South Korea

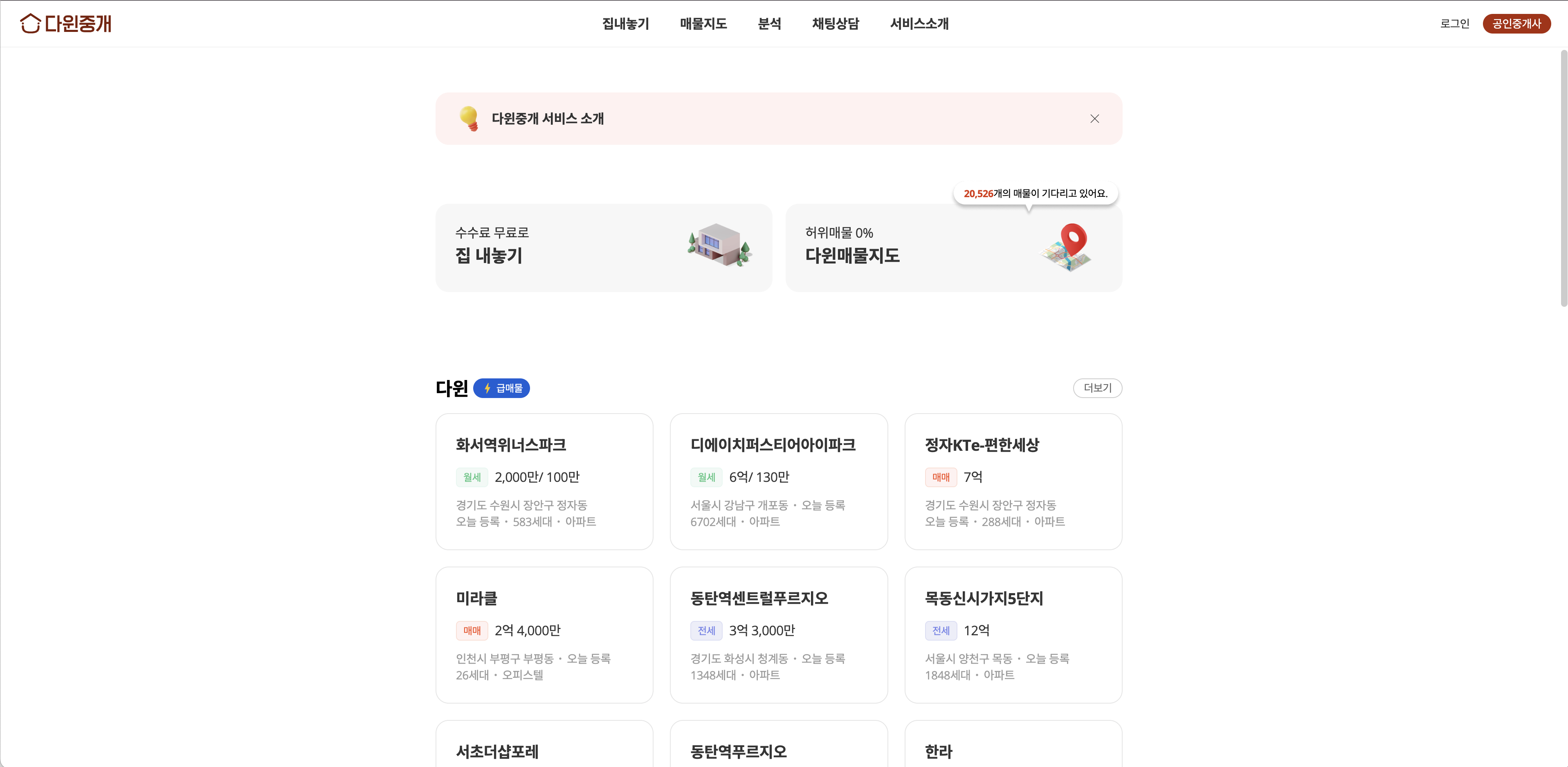

Now that I think about it, the main reason I joined this company was probably because I wanted to experience something new—I knew they were using Vue (no experience) instead of React (what I was most familiar with), and I knew that my job was going to have something to do with WebGL technology, which I had zero idea of what to expect. Well, I've also got to admit that this company—at least from what I'd gathered on their website and the products they were selling—gave a different (in a positive way) vibe, compared to all the Korean companies that I had experienced before. In fact, I was going to take the offer I got from other company, but after the final interview with 3i, I changed my mind almost on a whim.

I guess challenging oneself with new adventures from time to time can be refreshing and rewarding, but this time there was just too much to take on that I was pretty much burned out at the end 😅. The biggest challenge I had was the fact that there was practically no one in the company I could ask for help whenever I got stuck in the code level—all the major devs who built these Vue.js and three.js apps were long gone already by the time I got there. Nor were there any sort of written documentation/wiki about the codebases preserved within the company (I really should've run away at this point, but well I didn't..)

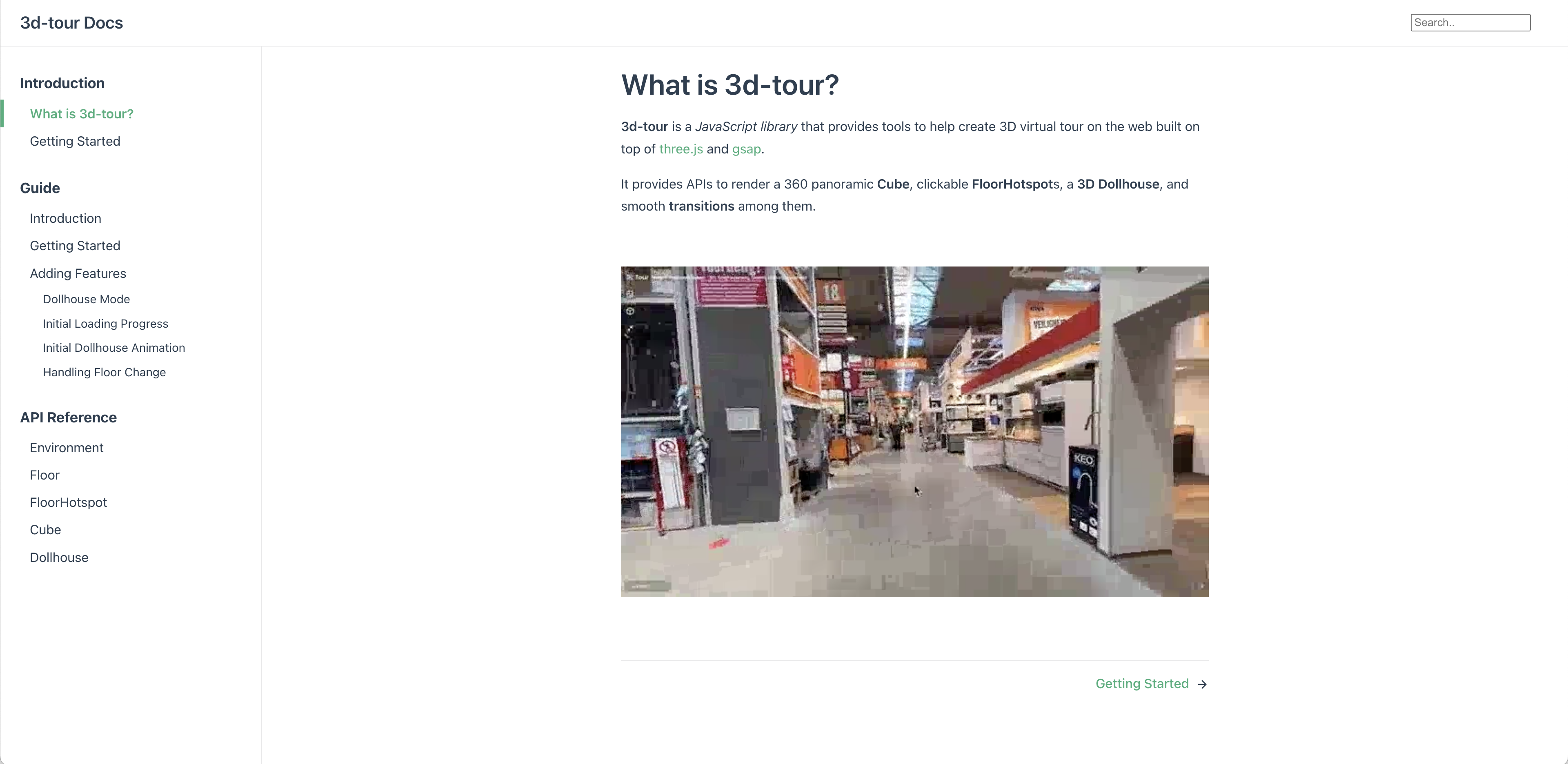

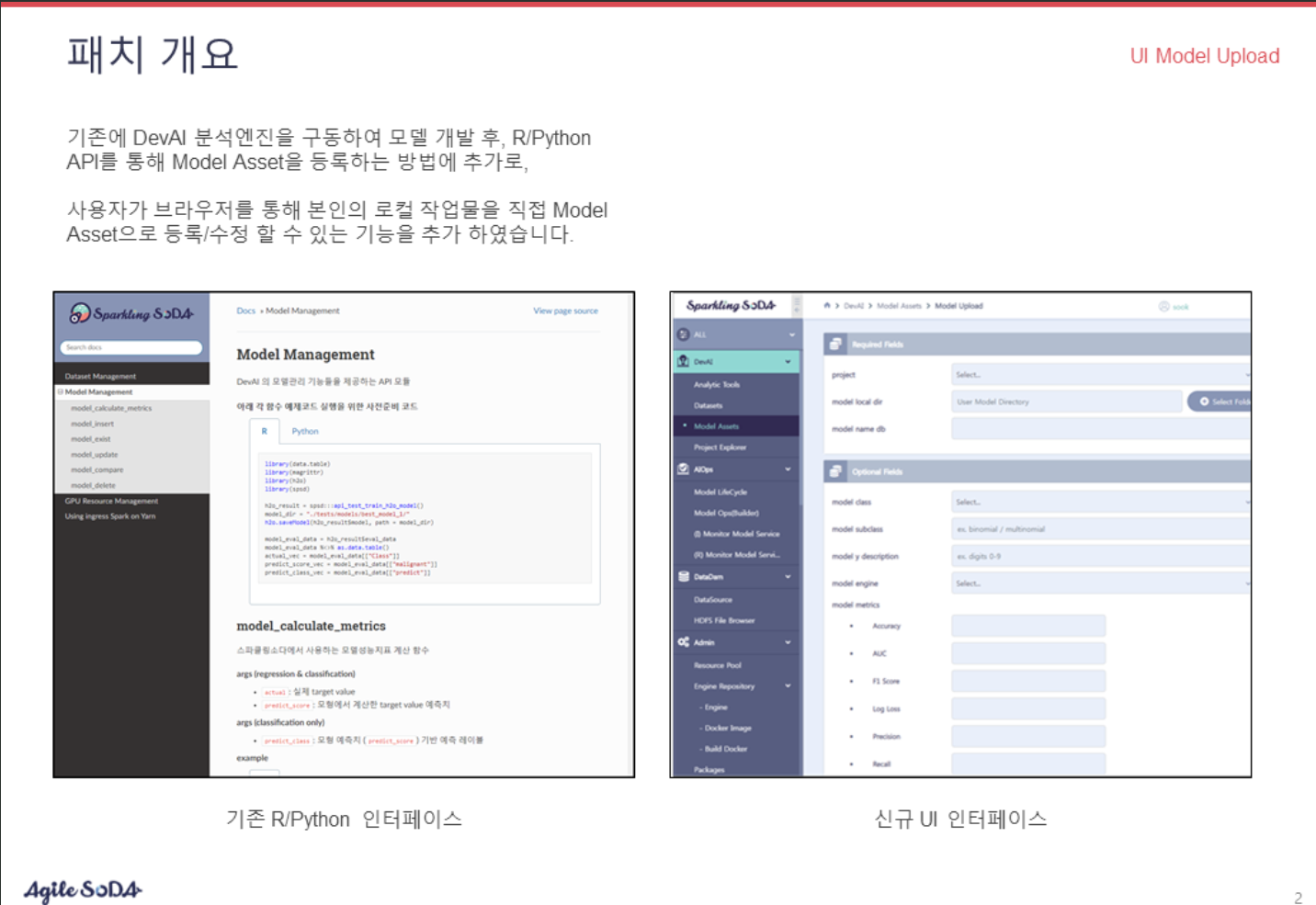

At least I learned a lot about 3D graphics in this period, while working on the complete rewrite of the company's JavaScript 3D engine—something very similar to Google Street View. It was a daunting task that felt impossible at first, but I managed to come up with two(!) versions (v1 & v2) of the rewrite at the end, each accompanied with a fairly thorough documentation—three.js manual and books like Introduction to Computer Graphics helped me grasp the core ideas of computer graphics immensely, and I should also mention Three.js journey helped me organize the structure of the codebase a lot.

Speaking of code structure, it was this time around that I first got interested in design patterns to find the best proven pattern that fit my codebase. For example, I had a bunch of classes that were oblivious to each other's existence, but nevertheless needed to exchange information. So basically I wanted a mechanism to deliver a state change to the target class (from some random classes the target class doesn't know exist) and to subsequently propagate the change in state to those classes that are interested—I think in the end I resorted to copying mrdoob's eventdispatcher.js for the job. What I also learned while dealing with several classes was how tricky it could be to come up with the right level of abstractions—sometimes I exposed too little public APIs for a class to be flexible, and other times I exposed too much such APIs that the consumers of the class took almost complete control over the internals of the class, which inevitably resulted in tight coupling between them. This whole journey of seeking good design patterns and abstractions actually left me wanting to study college level CS education even more than usual—in fact, this was one of the trigger points that made me leave the company and decide to take a break from work altogether for a while.

Apart from 3D graphics and three.js, I also got to experience some web technologies that were new to me. Most notably, it was my first time using Vue (both 2 & 3), first time using TypeScript, first time with rollup, and vite. For the docs, I used VitePress and Docusaurus.

It's weird because I can't really articulate why, but while using Vue—even though I didn't have major issues using them—I found myself craving for React more. I still remember the impression I got while reading (old) React docs. It felt like the documentation was written by very mature and smart computer scientists. For example, when I was reading this section of the docs, I was convinced that whoever wrote this must have known what they were doing; it conveyed such a powerful authority. Well as much as I respect Evan You, I didn't really feel such impression while reading Vue's docs. And I guess it's also the people around React such as Dan Abramov who I respect a lot as an engineer that made me want to come back to using React. All in all, my career at this company had nothing to do with React, but it ended up making me more loyal to it in the end 😄.